A Guide on How to Improve Your Website's Search Indexation

5 Min Read

Website indexing is the process search engines use to understand the purpose of your website. It helps Google find your website, add it to its index, associate each page with relevant search topics, return the site in the SERPs, and ultimately send you users whose intent matches your web pages.

Think about how the index of a book works: it records key words and information that give more context about a subject. Website indexing does the same for search engine results pages. What you see on a search engine isn't the live internet; it's the search engine's index of the internet, like an interactive snapshot.

This matters because not every page you publish will get a search engine's attention. As a website owner, you need to take a few steps to get your pages added to Google's index. Google indexes pages that are:

- Aligned with popular searches.

- Easy to navigate to from the website's homepage.

- Linked to other pages on and off your site's domain.

- Not "blocked" from indexation.
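
To make "easy to navigate to from the homepage" more concrete: a crawler discovers pages by following links outward from pages it already knows, so it helps to measure how many clicks each page sits from the homepage and which pages cannot be reached at all. The sketch below runs a breadth-first search over a hypothetical, hard-coded internal-link map (`LINKS`); in practice you would build that map from a crawler export, and the function names are illustrative rather than part of any tool.

```python
from collections import deque

# Hypothetical internal-link map: each page lists the pages it links to.
# In practice this would come from a crawler export; here it is hard-coded.
LINKS = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/local-seo/", "/services/ecommerce-seo/"],
    "/blog/": ["/blog/indexing-guide/"],
    "/services/local-seo/": ["/"],
    "/services/ecommerce-seo/": [],
    "/blog/indexing-guide/": ["/services/local-seo/"],
    "/old-landing-page/": ["/"],  # links out, but nothing links to it
}


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Breadth-first search from the homepage: clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # in BFS the first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def unreachable_pages(links: dict[str, list[str]], home: str = "/") -> set[str]:
    """Pages known to the site that cannot be reached by following links from home."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return all_pages - set(click_depths(links, home))


if __name__ == "__main__":
    for page, depth in sorted(click_depths(LINKS).items(), key=lambda kv: kv[1]):
        print(depth, page)
    print("Unreachable:", unreachable_pages(LINKS))
```

Here `/old-landing-page/` is reported as unreachable because nothing links to it, even though it links out to the homepage.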

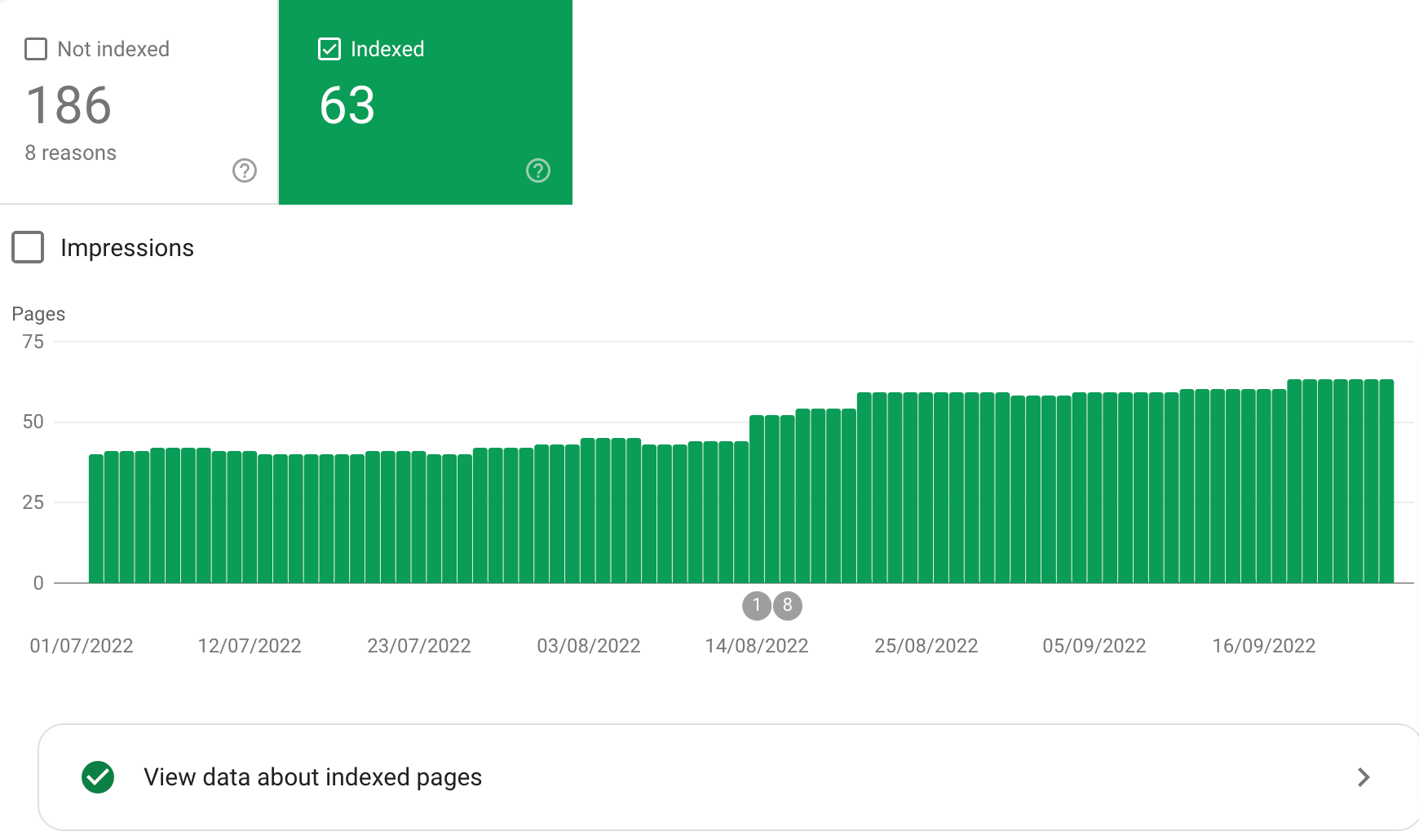

Use Google Search Console

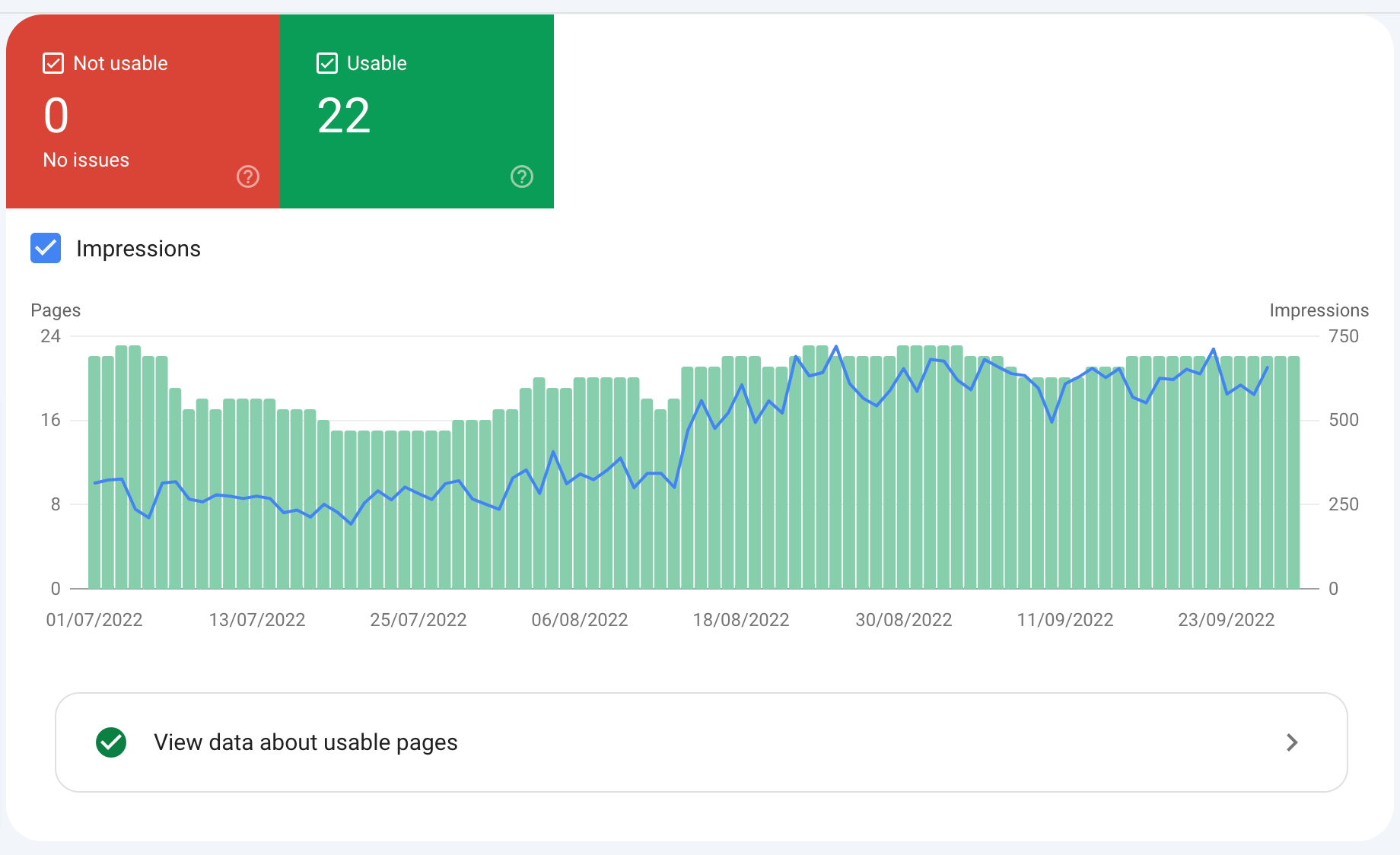

Errors in your crawl status could indicate a deeper issue on your site, so check it regularly to identify errors affecting your site's overall performance. From the sidebar in Google Search Console, you can check your sitemap status and the indexation status of your pages, as shown below:

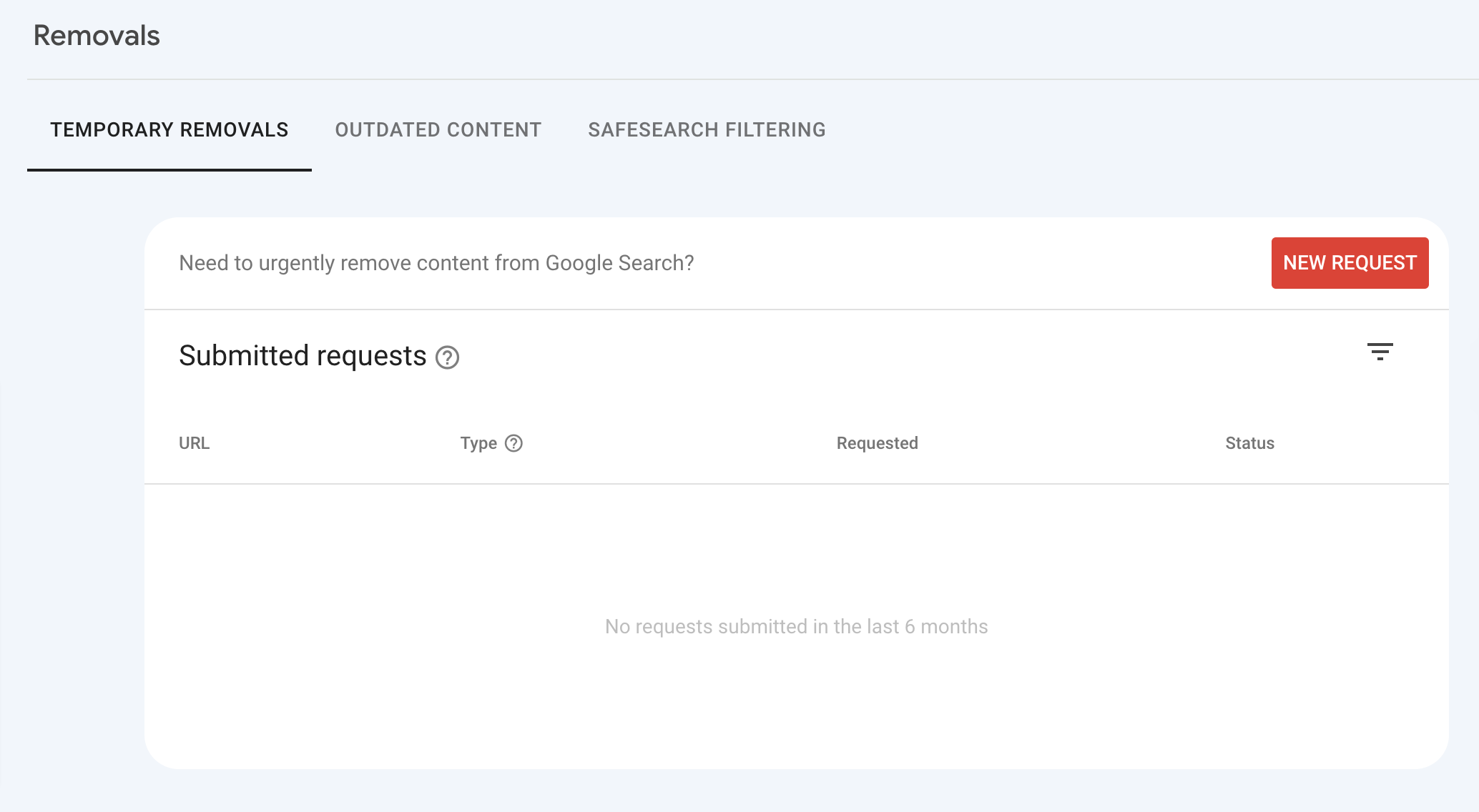

If you want to temporarily remove a specific page from Google's search results, you can request this directly in Search Console using the Removals tab, as shown below:

This is helpful if a page is temporarily redirected or returns a 404 error. However, remember that returning a 410 (Gone) status code tells search engines the page has been removed permanently, so it will be dropped from the index; use it with care.
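
A minimal sketch of the server side of this, using only Python's standard library and hypothetical `LIVE_PATHS` and `REMOVED_PATHS` sets: live pages return 200, pages you have deliberately retired return 410 Gone, and anything else falls through to 404 Not Found. A real site would usually handle this in its web server or CMS configuration rather than a hand-written handler.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlsplit

# Hypothetical URL inventories; a real site would load these from its CMS.
LIVE_PATHS = {"/", "/services/", "/blog/"}
REMOVED_PATHS = {"/old-promo/", "/discontinued-product/"}  # retired on purpose

REASONS = {200: b"OK", 404: b"Not Found", 410: b"Gone"}


def status_for_path(path: str) -> int:
    """Pick the HTTP status for a request path.

    200 for live pages, 410 Gone for pages removed permanently,
    404 Not Found for anything the site does not know about.
    """
    if path in LIVE_PATHS:
        return 200
    if path in REMOVED_PATHS:
        return 410
    return 404


class RemovalAwareHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Ignore any query string when matching paths.
        status = status_for_path(urlsplit(self.path).path)
        body = REASONS[status]
        self.send_response(status)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 8000) -> None:
    """Serve on localhost for local testing only (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), RemovalAwareHandler).serve_forever()
```

To try it locally, call `run()` and request `http://127.0.0.1:8000/old-promo/`; the response status will be 410.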

Mobile-Friendly Pages

With Google's switch to mobile-first indexing, announced for all sites in 2020, every web page should serve a mobile-friendly version for the mobile index. If no mobile-friendly version exists, the desktop version will still be indexed and displayed on mobile, but your rankings may suffer as a result. Many technical tweaks can quickly make your website more mobile-friendly, including:

- Implementing responsive web design.

- Adding the viewport meta tag to each page's HTML head.

- Minifying on-page resources (CSS and JS).

- Creating AMP versions of pages, which can be served from the AMP cache.

- Optimising images to improve load time.

- Compressing UI enhancements and reducing their size and use.
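
The standard viewport tag is `<meta name="viewport" content="width=device-width, initial-scale=1">`. As a rough sketch, the check below uses Python's built-in `html.parser` to report whether a page's HTML contains a viewport meta tag and what it is set to; `ViewportFinder` and `viewport_content` are hypothetical names, and fetching the HTML is left out.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content attribute of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # html.parser lower-cases tag and attribute names, but not values.
        if tag != "meta" or self.viewport is not None:
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content") or ""


def viewport_content(html: str) -> Optional[str]:
    """Return the viewport meta content, or None if the page has no viewport tag."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    return finder.viewport


if __name__ == "__main__":
    page = '<html><head><meta name="viewport" content="width=device-width, initial-scale=1"></head></html>'
    print(viewport_content(page))
```

Self-closing tags such as `<meta ... />` are handled too, because `HTMLParser` passes them through `handle_starttag` by default.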

It is also essential to run your site through Google PageSpeed Insights. Speed is an important ranking factor, and it can also affect how quickly search engines can crawl your site.
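
Two of the speed fixes listed above, minifying CSS and compressing responses, can be seen in miniature with the standard library. `naive_minify_css` is a deliberately simple, hypothetical helper rather than a production minifier, and `gzip_size` only approximates what a server sends when text compression is enabled.

```python
import gzip
import re


def naive_minify_css(css: str) -> str:
    """Rough CSS minifier, for illustration only.

    Strips /* comments */, collapses whitespace and trims spaces around
    braces, semicolons and commas and after colons. It does not understand
    quoted strings or url(...) values, so use a real minifier in production.
    """
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove comments
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)           # trim around punctuation
    css = re.sub(r":\s+", ":", css)                       # "color: red" -> "color:red"
    css = css.replace(";}", "}")                          # final semicolon is optional
    return css.strip()


def gzip_size(text: str) -> int:
    """Bytes after gzip, roughly what is sent when text compression is enabled."""
    return len(gzip.compress(text.encode("utf-8")))


if __name__ == "__main__":
    stylesheet = "/* buttons */\n.btn {\n  color: #fff;\n  padding: 4px 8px;\n}\n" * 50
    minified = naive_minify_css(stylesheet)
    print("original:", len(stylesheet.encode("utf-8")), "bytes")
    print("minified:", len(minified.encode("utf-8")), "bytes")
    print("minified + gzip:", gzip_size(minified), "bytes")
```

Minification removes bytes the browser never needed, while compression shrinks what remains in transit; the two are complementary rather than alternatives.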

Continuously Update Content

Search engines like Google crawl your site more often if you publish new content regularly. A steady stream of new content signals that your site is actively maintained and improving, so Google and other search engines have reason to crawl it more often to pick up new pages and show them to their intended audience.

Submit Your Sitemap

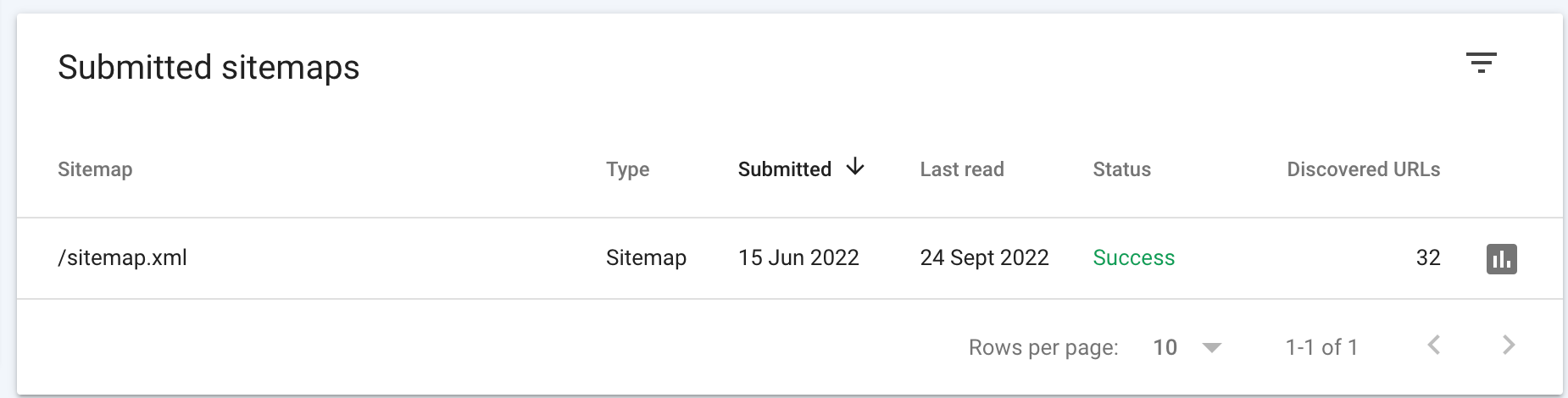

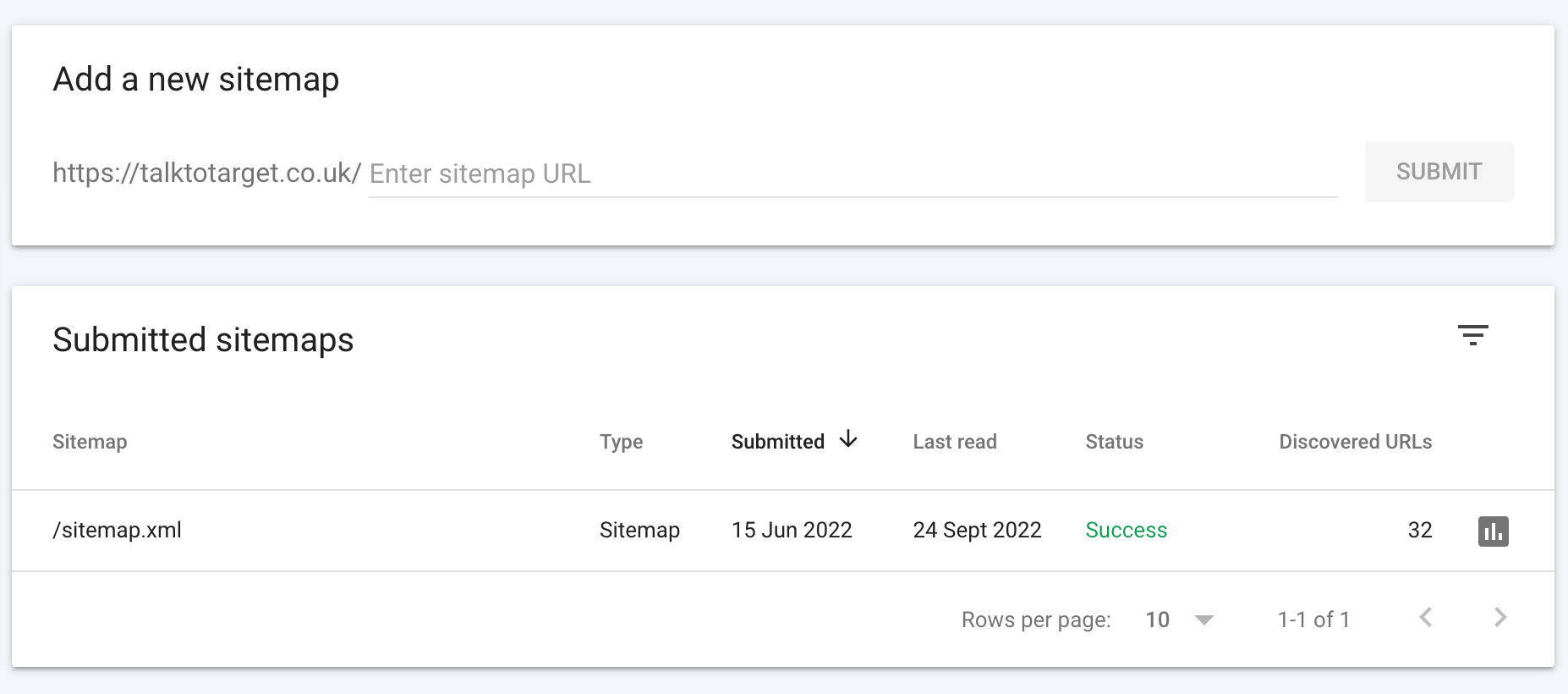

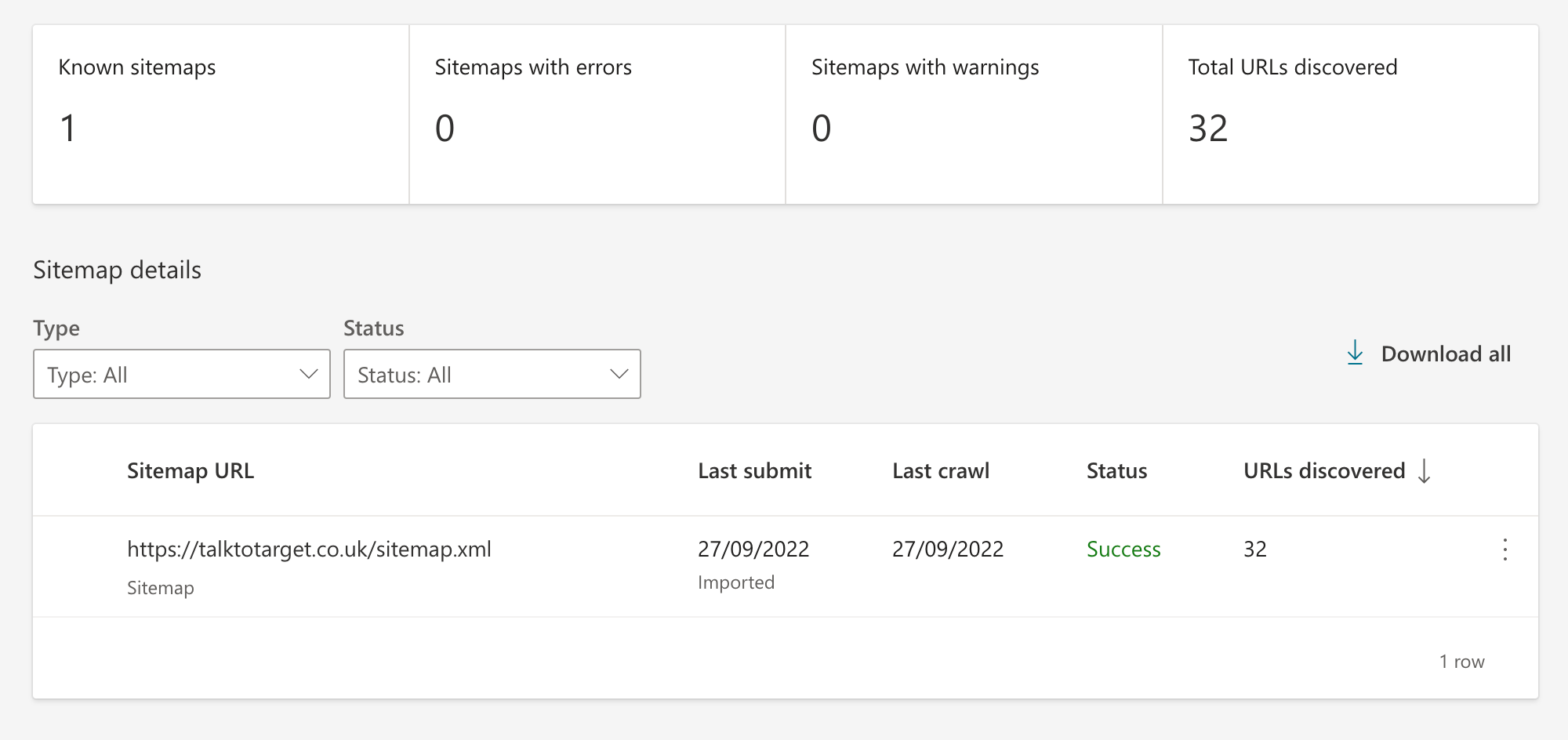

One of the best tips for indexation is to submit a sitemap to Google Search Console and Bing Webmaster Tools, as shown below:

You can generate an XML sitemap using Screaming Frog or create one manually, then submit it in Google Search Console. Where pages contain duplicate content, list only the canonical version of each page.
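
A minimal sketch of building an XML sitemap with Python's `xml.etree.ElementTree`, following the sitemaps.org 0.9 format. The `PAGES` list, its URLs and its dates are hypothetical; the point is that non-canonical duplicate variants are skipped so only canonical URLs end up in the file.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical crawl data: (url, canonical_url, last_modified).
PAGES = [
    ("https://www.example.com/", "https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/services/", "https://www.example.com/services/", "2024-01-10"),
    # Duplicate variant whose canonical is /services/; it should not be listed.
    ("https://www.example.com/services/?ref=nav", "https://www.example.com/services/", "2024-01-10"),
]


def build_sitemap(pages) -> bytes:
    """Return sitemap XML containing each canonical URL exactly once."""
    ET.register_namespace("", SITEMAP_NS)  # serialise as the default xmlns
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    listed = set()
    for url, canonical, lastmod in pages:
        if url != canonical or url in listed:
            continue  # skip non-canonical duplicates and repeats
        listed.add(url)
        entry = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}loc").text = url
        ET.SubElement(entry, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


if __name__ == "__main__":
    print(build_sitemap(PAGES).decode("utf-8"))
```

You could then save the result with `open("sitemap.xml", "wb").write(build_sitemap(PAGES))`, upload it to your site, and submit its URL in Google Search Console.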

Ensure Proper Website Architecture

Establishing a consistent information architecture is crucial for getting your website indexed and properly organised. Creating main service categories for related web pages to sit under can help search engines index page content correctly when its intent is unclear. For example, you might use parent service pages with child pages underneath covering parts of that service, e.g. SEO Consultancy Services (parent page) with eCommerce SEO or Local SEO (child service pages). You can also view your website architecture as a graphical tree map of its URL structure in Screaming Frog: crawl the website, then navigate to Reports and Directory Tree Graph.

Deep Link To Orphaned and Isolated Pages

If a page on your site or a subdomain is isolated, or an error prevents it from being crawled, you can get it indexed by acquiring a link to it from an external domain. This is also a useful strategy for promoting new content on your website and getting it indexed more quickly. Be wary of syndicating content to earn these links: search engines may ignore syndicated pages, and syndication can create duplicate content issues if not correctly canonicalised. You can also bring these pages out of isolation by adding internal links to them from higher-level pages on your website, so search engines find them by following links during the crawl.

Minify Unnecessary Elements to Improve Load Times

Asking search engines to crawl large, unoptimised, uncompressed images eats up your crawl budget and can prevent your site from being indexed as often as it should be. Search engines also have difficulty crawling certain elements of your website; for example, Google has historically struggled to crawl JavaScript. In addition, resources like Flash and CSS can perform poorly on mobile devices and eat up your crawl budget. Ignoring this creates a no-win scenario in which you sacrifice page speed and crawl budget for obtrusive on-page elements added for design's sake. Optimise your pages for speed, especially on mobile, by minifying on-page resources such as CSS. You can also enable caching and compression to help spiders crawl your site faster.

Fix Noindex Pages

Sometimes it makes sense to add a noindex tag to pages meant only for users who take a particular action, like reaching a specific landing page. In either case, you can use a tool like Screaming Frog to find pages carrying noindex tags that keep them out of the index. If your site runs on WordPress, the Yoast plugin lets you switch a page between index and noindex yourself, without relying on a development team.

Set A Custom Crawl Rate

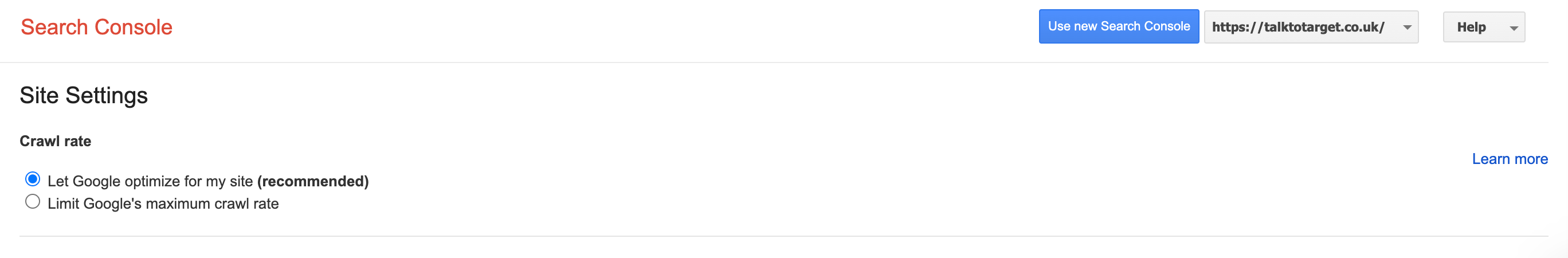

In Google Search Console, you can slow down or customise Google's crawl rate, as shown below:

Eliminate Duplicate Content

Large amounts of duplicate content can significantly slow your crawl rate and waste your crawl budget. These pages are simple to find with a tool such as Semrush, whose Site Audit highlights duplicate pages and content issues. You can eliminate these problems by blocking the duplicates from indexing or by adding a canonical tag that points to the page you want indexed. Along the same lines, it pays to optimise each page's meta tags so search engines don't mistake similar pages for duplicate content during the crawl. To do this, make sure each primary (focus) keyword is targeted by only one page on your site.

Block Pages You Don't Want Spiders To Crawl

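Sometimes you want to prevent search engines from crawling or indexing a specific page. You can do this by:

- Placing a noindex tag on the page, using a plugin like Yoast.

- Disallowing the URL in your robots.txt file. This stops crawling, not indexing, and a crawler blocked by robots.txt can't see a noindex tag on that page, so don't combine the two on the same URL.

- Deleting the page altogether.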
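
A page blocked by robots.txt is never fetched, so a crawler cannot see a noindex tag on it. The sketch below uses Python's `urllib.robotparser` to test a URL against a hypothetical robots.txt and `html.parser` to look for a noindex directive in the page's robots meta tag, then flags the combination that hides the noindex from crawlers. The robots.txt rules, URLs and `audit` messages are illustrative assumptions; note that Python's parser uses simple prefix matching, so it may not interpret every rule (for example, wildcard patterns) exactly as Googlebot does.

```python
import urllib.robotparser
from html.parser import HTMLParser

# Hypothetical robots.txt for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /internal-search
"""


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if robots_txt lets user_agent fetch url (simple prefix matching only)."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() in {"robots", "googlebot"}:
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def has_noindex(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return "noindex" in finder.directives


def audit(url: str, html: str, robots_txt: str = ROBOTS_TXT) -> str:
    """Classify a page; the messages are illustrative, not official terminology."""
    crawlable = is_crawl_allowed(robots_txt, url)
    noindex = has_noindex(html)
    if not crawlable and noindex:
        return "conflict: robots.txt blocks crawling, so the noindex tag is never seen"
    if not crawlable:
        return "blocked: not crawled, but can still be indexed if linked from elsewhere"
    if noindex:
        return "noindex: crawlable, so the noindex tag can be read"
    return "indexable"
```

Running `audit` over the URLs you plan to block is a quick way to catch pages that carry a noindex tag but are also disallowed in robots.txt.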